Professor: Esteban Zimanyi

Student e-mail: jose.lorencio.abril@ulb.be

This is a summary of the course Advanced Databases taught at the Université Libre de Bruxelles by Professor Esteban Zimanyi in the academic year 22/23. Most of the content of this document is adapted from the course notes by Zimanyi, [1], so I won’t be citing it all the time. Other references will be provided when used.

List of Algorithms

Traditionally, DBMS are passive, meaning that all actions on data result from explicit invocation in application programs. In contrast, active DMBS can perform actions automatically, in response to monitored events, such as updates in the database, certain points in time or defined events which are external to the database.

Integrity constraints are a well-known mechanism that has been used since the early stages of SQL to enhance integrity by imposing constraints to the data. These constraints will only allow modifications to the database that do not violate them. Also, it is common for DBMS to provide mechanisms to store procedures, in the form of precompiled packets that can be invoked by the user. These are usually caled stored procedure.

The active database technology make an abstraction of these two features: the triggers.

Definition 1.1. A trigger or, more generally, an ECA rule, consists of an event, a condition and a set of actions:

Event: indicates when the trigger must be called.

Condition: indicates the checks that must be done after the trigger is called. If the condition is fulfilled, then the set of actions is executed. Otherwise, the trigger does not perform any action.

Actions: performed when the condition is fullfilled.

Example 1.1. A conceptual trigger could be like the following:

| Event | A customer has not paid 3 invoices at the due date. |

| Condition | If the credit limit of the customer is less than 20000€. |

| Action | Cancel all curernt orders of the customer. |

There are several aspects of the semantics of an applications that can be expressed through triggers:

Static constraints: refer to referential integrity, cardinality of relations or value restrictions.

Control, business rules and workflow management rules: refer to restrictions imposed by the business requirements.

Historical data rules: define how historial data has to be treated.

Implementation of generic relationships: with triggers we can define arbitrarily complex relationships.

Derived data rules: refer to the treatment of materialized attributes, materialized views and replicated data.

Access control rules: define which users can access which content and with which permissions.

Monitoring rules: assess performance and resource usage.

The benefits of active technology are:

Simplification of application programs by embedding part of the functionality into the database using triggers.

Increased automation by the automatic execution of triggered actions.

Higher reliability of data because the checks can be more elaborate and the actions to take in each case are precisely defined.

Increased flexibility with the possibility of increasing code reuse and centralization of the data management.

Even though this basic model is simple and intuitive, each vendor proposes its own way to implement triggers, which were not in the SQL-92 standard. We are going to study Starbust triggers, Oracle triggers and DB2 triggers.

Starbust is a Relational DBMS prototype developed by IBM. In Starbust, the triggers are defined with the following definition of their components:

Event: events can refer to data-manipulation operations in SQL, i.e. INSERT, DELETE or UPDATE.

Conditions: are boolean predicates in SQL on the current state of the database after the event has occurred.

Actions: are SQL statements, rule-manipulation statements or the ROLLBACK operation.

Example 2.1. ’The salary of employees is not larger than the salary of the manager of their department.’

The easiest way to maintain this rule is to rollback any action that violates it. This restriction can be broken (if we focus on modifications on the employees only) when a new employee is inserted, when the department of an employee is modified or when the salary of an employee is updated. Thus, a trigger that solves this could have these actions as events, then it can check whether the condition is fullfilled or not. If it is not, then the action can be rollback to the previous state, in which the condition was fullfilled.

The syntax of Starbust’s rule definition is as described in Table 1. As we can see, rules have an unique name and each rule is associated with a single relation. The events are defined to be only database updates, but one rule can have several events defined on the target relation.

The same event can be used in several triggers, so one event can trigger different actions to be executed. For this not to produce an unwanted outcome, it is possible to establish the order in which different triggers must be executed, by using the PRECEDES and FOLLOWS declarations. The order defined by this operators is partial (not all triggers are comparable) and must be acyclic to avoid deadlocks.

Example 2.2. ’If the average salary of employees gets over 100, reduce the salary of all employees by 10%.’

In this case, the condition can be violated when a new employee is inserted, when an employee is deleted or when the salary is updated. Now, the action is not to rollback the operation, but to reduce the salary of every employee by 10%.

First, let’s exemplify the cases in which the condition is violated. Imagine the following initial state of the table Emp:

| Name | Sal |

| John | 50 |

| Mike | 100 |

| Sarah | 120 |

The average salary is 90, so the condition is fullfilled.

INSERT INTO Emp VALUES(’James’, 200)

The average salary would be 117,5 and the condition is not fullfilled.

DELETE FROM Emp WHERE Name=’John’

The average salary would be 110 and the condition is not fullfilled.

UPDATE Emp SET Sal=110 WHERE Name=’John’

The average salary would be 110 and the condition is not fullfilled.

The trigger could be defined as:

Note, nonetheless, that for the first example, we would get the following result:

| Name | Sal |

| John | 45 |

| Mike | 90 |

| Sarah | 108 |

| James | 180 |

Here, the mean is 105.75, still bigger than 100. We will see how to solve this issue later.

At this point, it is interesting to bring some definitions up to scene:

Definition 2.1. A transaction is a sequence of statements that is to be treated as an atomic unit of work for some aspect of the processing, i.e., a transaction either executes from beginning to end, or it does not execute at all.

Definition 2.2. A statement is a part of a transaction, which expresses an operation on the database.

Definition 2.3. En event (in a more precise way than before) is the occurrence of executing a statement, i.e., a request for executing an operation on the database.

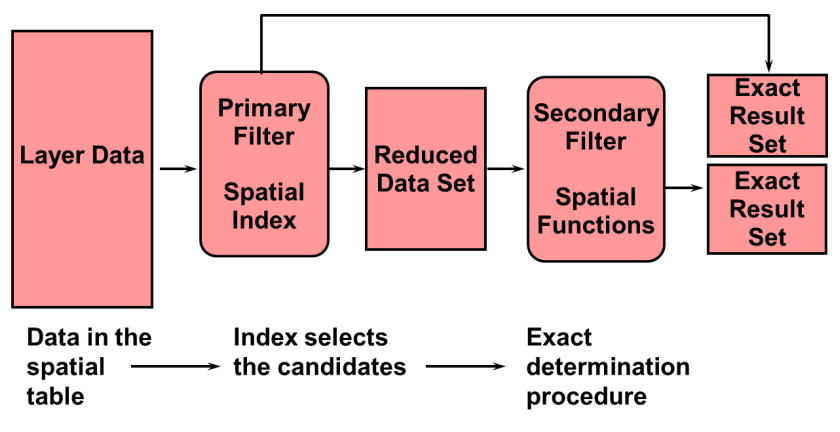

Thus, rules are triggered by the execution of operations in statements. In Starbust, rules are statement-level, meaning they are executed once per statement, even for statements that trigger events on several tuples. In addition, the execution mode is deffered. This means that all rules triggered during a transaction are placed in what is called the conflict set (i.e. the set of triggered rules). When the transaction finishes, all rules are executed in triggering order or in the defined order, if there is one. Nonetheless, if the need for a rule executing during a transaction exists, we can use the PROCESS RULES declaration, which executes all rules in the conflict set.

The algorithm for executing all rules after the transaction finished or PROCESS RULES is called is described in Algorithm 1. As we can see, rule processing basically involves 3 stages:

Activation: the event in the rule requests the execution of an operation and this is detected by the system.

Consideration: the condition of the rule is evaluated.

Execution: if the condition is fullfilled, the action in the rule is executed.

As we have seen before, rule definitions specify a partial order for execution, but several rules may have highest priority at the moment of selection. To achieve repeatability, the system maintains a total order, based on the user-defined partial order and the timestamps of the creation of the rules.

Note that the execution of the rules may trigger more rules, this could cause nontermination, if two rules call each other in a cycle. Thus, ensuring termination is one of the main problems of active-rule design.

Example 2.3. Return to the previous example. We saw how the first of the examples did not end up fullfilling the condition, but it is because we did not take into account that the rule would trigger itself because it updates the Salary of Emp. Thus, the insertion and subsequent execution of the rule triggered gave us:

| Name | Sal |

| John | 45 |

| Mike | 90 |

| Sarah | 108 |

| James | 180 |

As we have updated the salaries, the rule is triggered again. The condition would be fullfilled, 105.75>100 and the salaries would be modified again, arriving to the table as:

| Name | Sal |

| John | 41.5 |

| Mike | 81 |

| Sarah | 97.2 |

| James | 162 |

As the salaries ahve been updated again, the rule is triggered once more. Now, the mean is 95.425 < 100, so the condition is not met and the actions are not executed. The rule has terminated.

In this case, termination is ensured because all values are decreased by 10%. This implies that the mean is also decreased by 10%. Thus, no matter how high the mean is at the beginning, at some point it will go below 100, because 0.9x < x, ∀x > 0.

Remark 2.1. In general, guaranteeing termination is responsibility of the programmer is not an easy task.

A transaction causes a state transition of the database, in the form of an addition, suppression of modification of one or more tuples of the database.

Before the transaction commits, the system stores two temporary transition relations, that contain the tuples affected by the transition. This tables are:

INSERTED: for each event, it stores newly inserted tuples and the new form of tuples that have been modified.

DELETED: for each event, it stores deleted tuples and the old form of tuples that have been modified.

Example 2.4. Some simple net effects:

The sequence INSERT → UPDATE → ... → UPDATE → DELETE on a newl tuple, has null effect: the tuple was not in the database at the beginning, and it is not there at the end.

The sequence INSERT → UPDATE → ... → UPDATE on a new tuple, has the same net effect as inserting the tuple with the values given in the last update.

The sequence UPDATE → ... → UPDATE → DELETE on an existing tuple, has the same net effect as just deleting it.

Remark 2.2. Rules consider the net effect of transactions between two database states, so each tuple appears at most once in each temporary table.

Example 2.5. Let’s see the INSERTED and DELETED tables of our example. We start with the table

| Name | Sal |

| John | 50 |

| Mike | 100 |

| Sarah | 120 |

And perform UPDATE Emp SET Sal=110 WHERE Name=’John’. If we accessed the temporary tables now, we would see:

| INSERTED | |

| Name | Sal |

| John | 110 |

| DELETED | |

| Name | Sal |

| John | 50 |

Now, the rule is triggered because salary has been updated. The condition is met and the action is launched. If we accessed the temporary tables now, we would see:

| INSERTED | |

| Name | Sal |

| John | 99 |

| Mike | 90 |

| Sarah | 108 |

| DELETED | |

| Name | Sal |

| John | 50 |

| Mike | 100 |

| Sarah | 120 |

Note how the first tuple in INSERTED shows only the net effect on the tuple.

With this definitions, we can give a more precise definition of rule triggering:

A great feature of rules is that it is possible to reference the transition tables, which can be very useful in many occasions.

Example 2.6. Now, imagine we want to add the rule ’If an employee is inserted with a salary greater than 100, add the employee to the table of hifh paid employees’. This rule could be defined as:

When we insert (James, 200), the tuple is inserted and the rules SalaryControl and HighPaid are triggered. Because we have defined HighPaid to follow SalaryControl, the latter would execute earlier. Now, SalaryControl, as we saw, would trigger more instances of the same rule, which would be all executed before HighPaid. At the end, as all employees would have been modified, all of them with a salary bigger than 100 would be added to the table HighPaidEmp because of this new rule. In this case, only James fullfills the condition.

In Oracle, the term used is TRIGGER. Triggers in Oracle respond to modification operations (INSERT, DELETE, UPDATE) to a relation, just as in Starburst.

The triggers in Oracle can be defined for different granularities:

Tuple-level: the rule is triggered once for each tuple concerned by the triggering event.

Statement-level: the rule is triggered only once even if several tuples are involved.

Also, the execution mode of triggers in Oracle is immediate, meaning they are executed just after the event has ben requested, in contrast to Starburst, in which the execution mode is referred, as we saw. This allows for rules to be executed before, after or even instead of the operation of the triggering event. In 3, the definition syntax of triggers in Oracle is detailed. Some notes:

The BEFORE or AFTER commands define when the rule should execute in relation to the events that trigger it.

If FOR EACH ROW is written, then the trigger is a tuple-level trigger, and it is activated once for each tuple concerned.

This is useful if the code in the actions depends on data provided by the triggering statement or on the tuples affected.

The INSERTING, DELETING and UPDATING statements may be used in the action to check which triggering event has occurred.

OLD and NEW reference the old value of the tuple (if it is update or delete) and the new value of the tuple (if it is update or insert).

The condition consists of a simple predicate on the current tuple.

If FOR EACH ROW is not written, then the trigger is a statement-level trigger, and it is activated once for each triggering statement even if several tuples are involved or no tuple is updated.

Oracle triggers can execute actions containing arbitrary PL/SQL2 code (not just SQL as in Starburst).

Example 2.7. Row-level AFTER trigger.

Imagine we have two tables:

Inventory(Part, PartOnHand, ReorderPoint, ReorderQty)

PendingOrders(Part, Qty, Date)

We want to define a rule that whenever a PartOnHand is modified, and its new value is smaller than the ReorderPoint (i.e. we have less parts than the threshold to order more parts), we add a new record to PendingOrders as a new order for this part and the required quantity, if it is not already done. This can be done as follows:

Let’s apply the rule to see how it works. Let’s say our table Inventory is:

| Part | PartOnHand | ReorderPoint | ReorderQty |

| 1 | 200 | 150 | 100 |

| 2 | 780 | 500 | 200 |

| 3 | 450 | 400 | 120 |

If we execute the following transaction on October 10, 2000:

Then the tuple (1,100,2000-10-10) would be inserted into PendingOrders.

The algorithm for executing rules in Oracle is shown in Algorithm 2. As we can see, statement-level triggers are executed before/after anything else, and row-level triggers are executed before/after each affected tuple is modified. Note that the executions needs to take into account the priority among triggers, but only those of the same granularity (row vs statement) and type (before vs after).

The action part may activate other triggers. In that case, the execution of the current trigger is suspended and the others are considered using the same algorithm. There a maximum number of cascading triggerss, set at 32. When this maximum is reached, execution is suspended and an exception is raised.

If an exception is raised or an error occurs, the changes made by the triggering statement and the actions performed by triggers are rolled back. This means that Oracle supports partial rollback instead of transaction rollback.

This is another type of Oracle trigger, in which the action is carried out inplace of the statement that produced the activating event. These triggers are typically used to update views and they need to be carefully used, because changing one action Y for an action X can sometimes have unexpected behaviors.

Example 2.8. An Instead-of trigger:

This trigger automatically updates the manager of a department when a new manager is inserted.

In DB2, every trigger monitors a single event, and are activated immediately, BEFORE or AFTER their event. They can be defined row-level or statement-level, as in Oracle. But in this case state-transition values can be accessed in both granularities:

OLD and NEW refer to tuple granularity, as in Oracle.

OLD_TABLE and NEW_TABLE refer to table granularity, like the DELETED and INSERTED in Starburst.

DB2’s triggers cannot execute data definition nor transactional commands. They can raise errors which in turn can cause statement-level rollbacks.

The syntax is as in Table 4.

The processing is done as in Algorithm 3. Note that:

Steps 1) and 6) are not required when S if part of an user transaction.

If an error occurs during the chain processing of S, then the prior DB state is restored.

IC refers to Integrity Constraints.

Before-triggers: these are used to detect error conditions and to condition input values. They are executed entirely before the associated event and they cannot modify the DB (to avoid recursively activating more triggers).

After-triggers: these are used to embed part of the application logic in the DB. The condition is evaluated and the action is possibly executed after the event occurs. The state of the DB prior to the event can be reconstructed from transition values.

Several triggers can monitor the same event.

In this case, the order is total and entirely based on the creation time of the triggers. Row-level and statement-level triggers are intertwined in the total order.

If the action of a row-level trigger has several statements, they are all executed for one tuple before considering the next one.

Example 2.9. Imagine we have the following two tables:

| Part | ||

| PartNum | Supplier | Cost |

| 1 | Jones | 150 |

| 2 | Taylor | 500 |

| 3 | HDD | 400 |

| 4 | Jones | 800 |

| Distributor | ||

| Name | City | State |

| Jones | Palo Alto | CA |

| Taylor | Minneapolis | MN |

| HDD | Atlanta | GA |

And there is a referential integrity constraint that requires Part Suppliers to be also distributors, with HDD as a default Supplier:

Then, the following trigger is a row-level trigger that rollbacks when updating Supplier to NULL:

In SQL Server, a single trigger can run multiple actions, and it can be fired by more than one event. Also, triggers can be attached to tables or views. SQL Server does not support BEFORE-triggers, but it supports AFTER-triggers (they can be defined using the word AFTER or FOR3 ) and INSTEAD OF-triggers.

The triggers can be fired with INSERT, UPDATE and DELETE statements.

The option WITH ENCRYPTION encrypts the text of the trigger in the syscomment table.

Finally, the option NOT FOR REPLICATION ensures that the trigger is not executed when a replication process modifies the table to which the trigger is attached.

The syntax is shown in Table 5.

INSTEAD OF-triggers: are defined on a table or a view. Triggers defined on a view extend the types of updates that a view support by default. Only one per triggering action is allowed on a table or view. Note that views can be defined on other views, and each of them can have its own INSTEAD OF-triggers.

AFTER-triggers: are defined on a table. Modifications to views in which the table data is modified in response, will fire the AFTER-triggers of the table. More than one is allowed on a table. The order of execution can be defined using the sp_settriggerorder procedure. All other triggers applied to a table execute in random order.

Both clases of triggers can be applied to a table.

If both trigger classes and constraints are defined for a table, the INSTEAD OF-trigger fires first. Then, constraints are processed and finally AFTER-triggers are fired.

If constraints are violated, INSTEAD OF-trigger’s actions are rolled back.

AFTER-triggers do not execute if constraints are violated or if some other event causes the table modification to fail.

As stored procedures, triggers can be nested up to 32 levels deep and fired recursively.

Two transition tables are available: INSERTED and DELETED, which are as in Starburst.

The IF UPDATE(<column-name> clause determines whether an INSERT or UPDATE event ocurred to the column.

The COLUMNS_UPDATE() clause returns a bit pattern indicating fhich of the tested columns were isnerted or updated.

The @@ROWCOUNT function returns the number of rows affected by the previous Transact-SQL statement in the trigger.

A trigger fires even if no rows are affected by the event. The RETURN command can be used to exit the trigger transparently when this happens.

The RAISERROR command is used to display error messages.

There are some Transact-SQL statements that are not allowd in triggers:

ALTER, CREATE, DROP, RESTORE and LOAD DATABASE.

LOAD and RESTORE LOG.

DISK RESIZE and DISK INIT.

RECONFIGURE.

If in a trigger’s code it is needed to assign variables, then SET NOCOUNT ON must be included in the trigger code, disallowing the messages stating how many tuples were modified in each operation.

The INSTEAD OF DELETE and INSTEAD OF UPDATE triggers cannot be defined on tables that have a correspoonding ON DELETE or ON UPDATE cascading referential integrity defined.

Triggers cannot be created on a temporary or system table, but they can be referenced inside other triggers.

SQL Server enables to enable or disable nested and recursive triggers:

Nested trigger option: determines whether a trigger can be executed in cascade. There is a limit of 32 nested trigger operations. It can be set with sp_configure ’nested triggers’, 1 | 0.

Recursive trigger option: causes triggers to be re-fired when the trigger modifies the same table as it is attached to: the neste trigger option must be set to true. This option can be set with sp_dboption ’<db-name>’, ’recursive triggers’, ’TRUE’ | ’FALSE’.

Note that recursion can be direct if a trigger activates another instance of itself or indirect if the activation sequence is T1 → T2 → T1. The recursive trigger option only copes with the direct recursion, the indirect kind is dealt with the nested trigger option.

Trigger management includes the task of altering, renaming, viewing, dropping and disabling triggers:

Triggers can be modified with the ALTER TRIGGER statement, in which the new definition is provided.

Triggers can be renamed with the sp_rename system stored procedure as

sp_rename @objname = <old-name>, @newname = <new-name>

Triggers can be viewed by querying system tables or by using the sp_helptrigger and sp_helptext system stored procedures as

sp_helptrigger @tabname = <table-name>

sp_helptext @objname = <trigger-name>

Triggers can be deleted with the DROP TRIGGER statement.

Triggers can be enable and disable using the ENABLE TRIGGER and DISABLE TRIGGER clauses of the ALTER TABLE statement.

Example 2.10. Let’s work with a database with the following tables:

Books(TitleID, Title, Publisher, PubDate, Edition, Cost, QtySold)

Orders(OrderId, CustomerId, Amount, OrderDate)

BookOrders(OrderID, TitleId, Qty)

Here, Books.QtySold is a derived attribute which keeps track of how many copies of the book has been sold. We can make this updates automatic with the use of the following trigger:

When there is an insertion in BookOrders, the trigger fires and adds the corresponding quantity.

When there is a deletion, the trigger fires and subtracts the corresponding quantity.

An update creates both tables, so we would add and subtract to cope with the modification.

Rules provide programmers with an effective tool to support both internal applications and external applications:

Internal applications: rules support function provided by specific subsystems in passive DBSMs, such as the management of IC, derived data, replicated data, version maintenance,... Rules can usually be declaratively specified, generated by the system and hidden to the user.

External applications: these refer to the application of business rules to the data stored. Rules allow to perform computations that would usually need to be expressed in application code. In addition, rules provide many times a natural way to model reactive behavior of the data, as rules respond to external events and perform action in consequence. This approach becomes specially interested when rules express central policies, i.e., knowledge common to applications, centralizing the effort and reducing the cost.

Some examples of applications that can benefit from active technology and business rules are:

Monitoring access to a building and reacting to abnormal circumstances.

Watching evolution of share values on stock market and triggering trading actions.

Managing inventory to follow stock variations.

Managing a netwrok to for energy distribution.

Airway assignment in air traffic control.

As can be seen from these examples, a frequent case of application-specific rules are alterters, whose actions signal certain conditions that occur with ot without changing the database.

The integrity of a database refers to the consistency and conformity of the data with the database schema and its constraints. Thus, an integrity constraint is any assertion on the schema which is not defined in the data-structure aprt of the schema. Constraints declaratively specify conditions to be satisfied by the data at all times, so checking for integrity violations is done for every update of the state of the database.

Integrity constraints can be static if the predicates are evaluated on database states or dynamic if the predicates are evaluated on state transitions. They can also be classified as built-in if they are defined by special DDL (Data Definition Language) constructs (such as keys, nonnull values,...) or adhoc, which are arbitrarily complex domain-dependent constraints.

In practice, integrity maintenance is achieved through:

DBMS checks built-in constraint with automatically generated triggers.

DBMS supports limited forms of adhoc constraints.

The remaining constraints are implemented as active rules (triggers).

The process of rule generation may be partially automated:

The possible causes of violation are the events for the activation of the rule.

The declarative formulation of the constraint is the rule condition.

To avoid or eliminate the violation, an action is taken. The simplest approach is to rollback the transaction, this is done by abort rules, in contrast, the richer approach provides a domain-dependent corrective action, via repair rules.

Thus:

Abort rules check that integrity is not violation and prevent the execution of an operation which would cause the violation of the integrity by means of the ROLLBACK command.

Repair rules are more sophisticated than abort rules, because they make use of application-domain semantics to define a set of actions that restore integrity

Example 3.1. Let’s do a referential integrity example in Starburst:

We have relations Emp(EmpNo,DeptNo) and Dept(DNo). We have the regerential integrity condition

![Emp [DeptN o] ⊂ Dept[DN o],](summary0x.png)

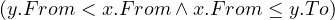

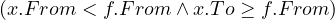

so the possible violations can come from an INSERT into Emp, a DELETE from Dept, and UPDATE of Emp[DeptNo] and an update of Dept[Dno]. The condition on tuples of Emp for not violating the constraint is:

Its denial form, so the constraint is violated is:

Thus, we can create abort rules as:

Note that one rule is neccessary for each relation.

Note also that the defined rules are inneficient, because the computation of the condition checks the whole database. Rules can assume that the constraint is verified in the initial state, so it suffices to compute the condition relative to transition tables.

Now, we are defining a repair rule that:

If an employee is inserted with a wrong value of DeptNo, it is set to NULL.

If the DeptNo of an employee is updated with a wrong value of DeptNo, it is set to 99.

If a department is deleted or its DNo is updated, then all employees from this department are deleted.

A view can be seen as a query on the DB which returns a relation or a class that can be used as any other relation or class. A derived attribute is an attribute that can be computed from other attributes in the DB. Both a view and a derived attribute can be expressed with declarative query language or deductive rules. There are two strategies for derived data:

Virtually supported: their content is computed on demand.

Materialized: their content is stored in the database, and it must be recomputed whenever the source of data is changed.

When an application queries a view, a rule is triggered on the request and the action substitutes and evaluates the view definition. It requires an event, triggered by queries, and an INSTEAD OF clause in rule language.

There exist two basic strategies:

Refresh: recompute the view from scratch after each update of the source data.

Incremental: compute changes to the view from changes in the source relations, using positive and negative deltas (a delta shows the changes experienced in the database. INSERTED and DELETED are one way to implement deltas).

The rule generation can be automated. Refresh rules are simple, but can be very inefficient. On the other hand, incremental rules depend on the structure of derivation rules, and can be complex.

Example 3.2. Imagine we have the following view definition:

So this view holds are departments in which some employee earns more than 50k€ a year. This view can change whenever an employee is inserted or deleted, its department is changed or is salary is changed; and whenever a department is inserted or deleted, or its Dno is updated.

A refresh rule defined in Starburst to handle this changes is:

As we can see, all elements from the view are deleted, and the view is recomputed entirely. The incremental approach is more complex. As an example, let’s define the rule for the case of Insert Dept:

Replication consists on storing several copies of the same information. This is a common practice in distributed databases. Keeping fully synchronized copies is usually very costly and unnecessary, so it is common to use aynchronous techniques to propagate changes between nodes.

Assymmetric replication: in this case there exists a primary copy, in which changes are performed, and several secondary copies, which are read only and are updated asynchronously. The capture module monitors changes made by applications to the primary copy, and the application module propagates these changes to the secondary copies.

Symmetric replication: all copies accept updates asynchronously and each of them has a capture and an application modules. It is needed to be careful, because simultaneous updates may cause loss of consistency.

Example 3.3. An example of capturing changes into deltas in Starburst:

The deltas are applied to the copies with a predefined policy, e.g. once very hour.

Active rules can impose a central consistent behavior independent of the transactions that cause their execution.

Active rules enforce data management policies that no transaction can violate.

Activities redundantly coded in several applications programs with passive DBMSs can be abstracted in a single version as a rule in an active DBMS.

Data management policies can evolve by just modifying the rules on the database, instead of the application programs (knowledge independence).

Rule organization and content are often hard to control and to specify declaratively (i.e. the rules are hard to code!).

Understanding active rules can be difficult, because they can react to intricate event sequences and the outcome of rule processing can depend on the order of the event ocurrences and the rule scheduling, which can be hard to analyze in complex systems.

There are no easy-to-use nor one-fits-all techniques for designing, debugging, verifying and monitoring rules.

This is an example of an application modeled with active rules, covering the business process:

’Management of the Italian electrical power distribution network.’

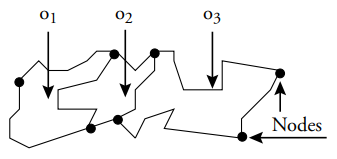

The operational network is a forest of trees, connecting power distributors to users. The operating conditions are monitored constantly with frequent reconfigurations: the structure of the network is dynamic. The topology is modified less frequently (we can consider it static). The objective is to transfer the exact power from distributors to users through nodes and directed branches connecting pairs of nodes.

In this scenario, active rules are used to respond to input transactions asking for:

Reconfigurations due to new users.

Changes in their required power.

Changes in the assignment of wires.

The schema of the database is:

BranchIn, Power) foreign key (BranchIn) References Branch

FromNode, ToNode, Power)

BranchIn, Power) foreign key (BranchIn) References Branch

Power, MaxPower)

BranchId, WireType, Power) foreign key (BranchId) references Branch foreign key (WirteType) references WireType

MaxPower)

The network is composed of sites anc onnections between pairs of sites:

Sites comprise:

Power stations: distributors where power is generated and fed into the network.

Intermediate nodes: nodes where power is transferred to be redistributed across the network.

Final users of electrical power.

Connections are called branches:

class Branch describes all connections between pairs of sites.

Several Wires are placed along the branches.

Wires are made of a given WireType, each type carrying a maximum power.

Branches can be dinamically added or dropeed to the network.

The business rules are the following:

Several user requests are gathered in a transaction.

If the power requested on wires excees the maximum power of the wire type, rules change or add wires in the relevant branches.

Rules propagate changes up in the tree, adapting the network to new user needs.

A transaction fails if the maximum power requested from some distributor exceeds the maximum power available at the distributor (in that case, the static network needs to be redesigned, but this is out of our scope).

To avoid unnecessary rollbacks, rules propagate reductions of power first, then increases of power. This requires setting the order in which the triggers execute4 .

A new user is connecting to a node with the following procedure:

The node to which a user is connected is determined by an external application: usually its closest node. ’WT1’ is the basic wire type. nextUserId, nextBranchId and nextWireId procedures are used to obtain the next identifier of a user, branch or wire.

If a user requires less power, this change needs to be propagated to its input branch:

If a node require less power, propagate the change to its input branch:

If a branch connected to a node requires less power, propagate the change to its input node.

If a branch connected to a distributor requires less power, propagate the change to the distributor.

If a user requires more power, propagate the change to its input branch.

If a node require more power, propagate the change to its input branch:

If a branch connected to a node requires more power, propagate the change to its input node.

If a branch connected to a distributor requires more power, propagate the change to the distributor.

If the power requested from a distributor exceeds its maximum, rollback the entire transaction.

If the power of a branch is changed, distributes the change equally on its wires.

If the power on a wire goes above the allowed threshold, change the wire type.

If there is no suitable wire type, add another wire to the branch.

Relational DBMSs are too rigid for Big Data scenarios, and not the best option for storing unstructured data. The one-size-fits-all approach is no longer valid in many scenarios and RDBMSs are hard to scale for billions of rows, because data structures used in RDBMSs are optimized for systems with small amounts of memory.

NoSQL technologies do not use the relational model for storing data nor retrieving it. Also, they don’t generally have the concept of schema, so fields can be added to any record as desired, without control. These characteristics provide ease to run on clusters as well as an increased scaling capability, with horizontal scalability in mind. But these gains are not free: there is a trade-off in which the traditional concept of consistency gets harmed. For example, ACID (Atomic, Consistent, Isolated, Durable) transactions are not fully supported most of the times.

There are several types of NoSQL databases, such as Key-Value stores, Column stores, Document databases,... We are going to focus on Graph Databases.

Definition 4.1. The consistency of a database is fullfilled when all updates happen equally for all users of the database.

The availability guarantees that every request receives a response, whether it succeeded or failed.

The partition tolerance means that the system is able to operate despite arbitrary message lost or failure of part of the system.

A database has, ideally, this three properties fullfilled. But the CAP theorem ensures that this is impossible:

Remark 4.1. If the system needs to be distributed, then partition tolerance is a must. So, in practice, there is a decision to choose between fullfilling consistency or availability for distributed systems.

Remark 4.2. Nonetheless, even the perfect form of the three properties cannot be guaranteed at once, it is possible to provide fairly good levels of the one that is not optimal.

With fewer replicas in the system, R/W operations complete more quickly, improving latency.

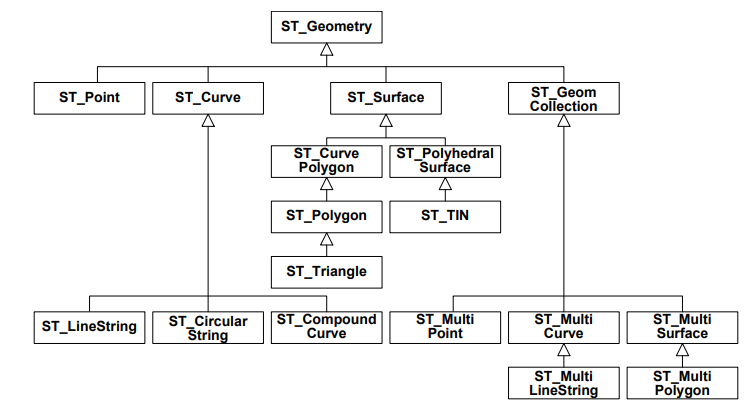

In graph databases, the data and/or the schema are represented by graphs, or by data structures that generalize the notion of graph: hypergraphs.

The integrity constraints enforce data consistency. Constraints can be grouped in: schema-instance consistency, identity and referencial integrity, and functional and inclusion dependencies.

The data manipulation is expressed by graph transfromations, or by operations whose main primitives are on graph features, like paths, neighborhoods, subgraphs, connectivity and graph statistics.

RDF allows to express facts, such as ’Ana is the mother of Julia.’, but we’d like to be able to express more generic knowledge, like ’If somebody has a daughter, then that person is a parent.’. This kind of knowledge is called schema knowledge. The RDF schema allows us to do some schema knowledge modeling, and the Ontology Web Language (OWL) gives even more expressivity.

A class is a set of things or resources. In RDF, everything is a resource, that belongs to a class (or several classes). The classes can be arranged in hierarchies. Every resource is a member of the class rdfs:Class.

The different resources are labelled with:

rdfs:Resource: class of all resources.

rdf:Property: class of all properties.

rdfs:XMLLiteral: class of XML resources.

rdfs:Literal: each datatype is a subclass.

rdfs:Bag, rdf:Alt, rdf:Seq, rdfs:Container, rdf:List, rdf:nil, rdfs:ContainerMembershipProperty.

rdfs:Datatype: class of all datatypes.

rdfs:Statement.

In RDF, there exists implicit knowledge, which can be inferred using deduction. This knowledge doesn’t need to be stated explicitly: which statements are logical consequences from others is governed by the formal semantics.

Example 4.2. If a RDF document contains ’u rdf:type ex:Textbook’ and ’ex:Textbook rdfs:subClassOf ex:Book’, then it is deduced that ’u rdf:type ex:Book’.

As can be seen, RDF are usually stored in triple stores or quad stores, which are a relational DB. This means that it is usually not implemented natively as a graph database, which makes it harder to do inference. It is more usually used as a metadata storage.

This model is simpler: in this case, the model is a graph, where nodes and edges are annotated with properties. It is schema-less, meaning there is not an underlying schema to which the data has to adhere. Inference is not performed.

There are several types of relationships supported by graph databases:

Attributes: properties that can be uni- or multi- valued.

Entities: groups of real-world objects.

Neighborhood relations: structures to represents neighborhoods of an entity.

Standard abstractions: part-of, composed-by, n-ary associations.

Derivation and inheritance: subclasses and superclasses, relations of instantiations.

Nested relations: recursively specified relations.

The abstract data type used is a graph with properties, which is a 4-tuple G =  such that:

such that:

V is a finite set of nodes.

Σ is a set of labels.

E ⊂ V × V is a set of edges representing labelled binary relationships between elements in V .

L is a function, L : V ×V → 2Σ, meaning that each edge can by annotated with zero or more labels from Σ.

The basic operations defined over a graph are:

AddNode : adds node x to G.

: adds node x to G.

DeleteNode : deletes x from G.

: deletes x from G.

Adjacent : tests if there is an edge from x to y in G, i.e., if

: tests if there is an edge from x to y in G, i.e., if  ∈ E.

∈ E.

Neighbors : returns all nodes y such that

: returns all nodes y such that  ∈ E.

∈ E.

AdjacentEdges : returns the set of lables of edges going from x to y.

: returns the set of lables of edges going from x to y.

Add : adds an edge between x and y with label l.

: adds an edge between x and y with label l.

Delete : deletes and edge between x and y with label l.

: deletes and edge between x and y with label l.

Reach : tests if there is a path from x to y. A path between x and y is a subset of nodes z1,...,zn

such that

: tests if there is a path from x to y. A path between x and y is a subset of nodes z1,...,zn

such that  ,

, ∈ E and

∈ E and  ∈ E for all i = 1,...,n - 1. The length of the path is how

many edges there are from x to y.

∈ E for all i = 1,...,n - 1. The length of the path is how

many edges there are from x to y.

Path : return a shorthest path from x to y.

: return a shorthest path from x to y.

2 - hop : return the set of nodes that can be reached from x using paths of length 2.

: return the set of nodes that can be reached from x using paths of length 2.

n - hop : returns the set of ndoes that can be reached from x using paths of length n.

: returns the set of ndoes that can be reached from x using paths of length n.

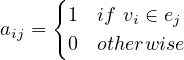

The notion of graph can be generalized by that of hypergraph, which is a pair H =  , where V is a set of nodes, and

E ⊂ 2V is a set of non-empty subsets of V , called hyperedges. If V =

, where V is a set of nodes, and

E ⊂ 2V is a set of non-empty subsets of V , called hyperedges. If V =  and E =

and E =  , we can define

the incidence matrix of H as the matrix A =

, we can define

the incidence matrix of H as the matrix A =  n×m where

n×m where

In this case, this is an undirected hypergraph. A directed hypergraph is defined similarly by H =  where in this case E ⊂ 2V × 2V , meaning that the nodes in the left set are connected to the nodes of the right

one.

where in this case E ⊂ 2V × 2V , meaning that the nodes in the left set are connected to the nodes of the right

one.

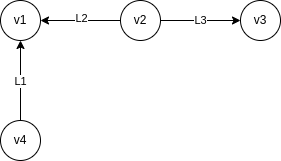

In an adjacency list, we maintain an array with as many cells as there are nodes in the graph, and:

For each node, maintain a list of neighbors.

If the graph is directed, the list is only containing outgoing nodes.

This way it is very cheap to obtain neighbors of a node, but it is not suitable for checking if there is an edge between two nodes.

Is modelled with the following adjacency list:

| v1 | |

| v2 | {(v1,{L2}),(v3,{L4})} |

| v3 | |

| v4 | {(v1,{L1})} |

In this case, we maintain two arrays, one with as many cells as nodes, and another one with as many different edges there are in the graph:

Vertices and edges are stored as records of objects.

Each vertex stores incident edges, labeled as source if the edge goes out, or destination if it goes in.

Each edge stores incident nodes.

Example 4.4. Now, the graph of the previous example is modeled as:

| v1 | {(dest,L2),(dest,L1)} |

| v2 | {(source,L2),(source,L3)} |

| v3 | {(dest,L3)} |

| v4 | {(source,L1)} |

| L1 | (V4,V1) |

| L2 | (V2,V1) |

| L3 | (V2,V3) |

Some properties:

Storage is O .

.

Adjacent is O

is O , we have to check at most all edges.

, we have to check at most all edges.

Neighbors is O

is O , we go to node x and for each edge marked as source, we visit it and return

the correspondant destination. At most E checks.

, we go to node x and for each edge marked as source, we visit it and return

the correspondant destination. At most E checks.

AdjacentEdges is again O

is again O .

.

Add is O

is O , as well as delete Delete

, as well as delete Delete .

.

In this case, we maintain a matrix of size n × n, where n is the number of nodes:

It is a bidimensional graph representation.

Rows represents source nodes.

Columnds represent destination nodes.

Each non-null entry represents that there is an edge from the source node to the destination node.

Properties:

The storage is O .

.

Adjacent is O

is O , we have to check cell

, we have to check cell  .

.

Compute the out-degree of a node is O , we have to sum its row.

, we have to sum its row.

For the in-degree it is also O , we have to sum its column.

, we have to sum its column.

Adding an edge between two nodes is O .

.

Compute all paths of length 4 between any pair of nodes is O .

.

In this case, we store a matrix of size n × m, where n is the number of nodes and m is the number of edges:

It is also a bidimensional graph representation.

Rows represent nodes.

Columns represent edges.

A non-null entry represents that the node is incident to the edge, and in which mode (source or destination).

Example 4.6. The example is represented now as

| L1 | L2 | L3 | |

| v1 | dest | dest | |

| v2 | source | source | |

| v3 | dest | ||

| v4 | source | ||

Properties:

The storage is O .

.

Adjacent is O

is O

Neighbors is O

is O .

.

AdjacentEdges is O

is O .

.

Adding or deleting an edge between two nodes is O .

.

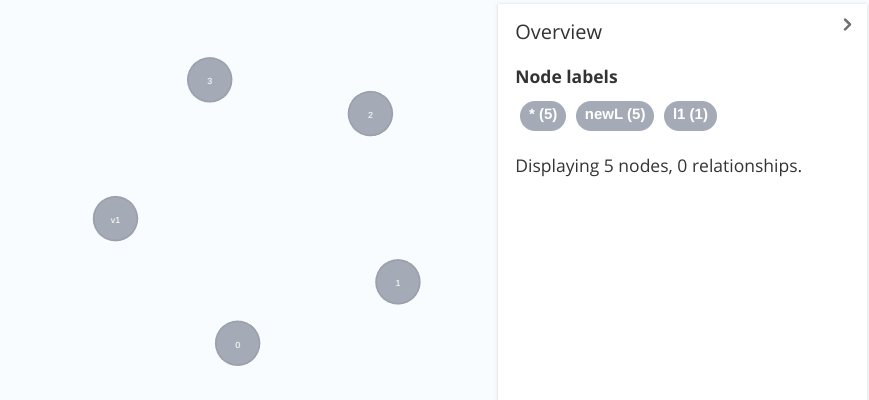

Neo4j is an open source graph DB system implemented in Java which uses a labelled attributed multigraph as data model. Nodes and edges can have properties, and there are no restrictions on the amount of edges between nodes. Loops are allowes and there are different types of traversal strategies defined.

It provides APIs for Java and Python and it is embeddable or server-full. It provides full ACID transactions.

Neo4j provides a native graph processing and storage, characterized by index-free adjacency, meaning that each node keeps direct reference to adjacent nodes, acting as a local index and making query time independent from graph size for many kinds of queries.

Another good property is that the joins are precomputed in the form of stored relationships.

It uses a high level query language called Cypher.

Graphs are stored in files. There are three different objects:

Nodes: they have a fixed length of 9 B, to make search performant: finding a node is O .

.

Its first byte is an in-use flag.

Then there are 4 B indicating the address of its first relationship.

The final 4 B indicate the address of its first property.

| inUse | ||||||||

| B | B | B | B | B | B | B | B | B |

Relationships: they have a fixed length of 33 B.

Its first byte is an in-use flag.

It is organized as double linked list.

Each record contians the IDs of the two nodes in the relationship (4B each).

There is a pointer to the relationship type (4 B).

For each node, there is a pointer to the previous and next relationship records (4 B x 2 each).

Finally, a pointer to the next property (4 B).

| inUse | ||||||||||||||||||||||||||||||||

| B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B |

Properties: they have a fixed length of 32 B divided in blocks og 8 B.

It includes the ID of the next property in the properties chain, which is thus a single linked list.

Each property record holds the property type, a pointer to the property index file, holding the property name and a value or a pointer to a dynamic structure for long strings or arrays.

| B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B | B |

Neo4j uses a cache to divide each store into regions, called pages. The cache stores a fixed number of pages per file, which are replaces using a Least Frequently Used strategy.

The cache is optimized for reading, and stores object representationsof nodes, relationships, and properties, for fast path traversal. In this case, node objects contain properties and references to relationships, while relationships contain only their properties. This is the opposite of what happens in disk storage, where most informaiton is in the relationship records.

Cypher is the high level query language used by Neo4j for creating nodes, updating/deleting information and querying graphs in a graph database.

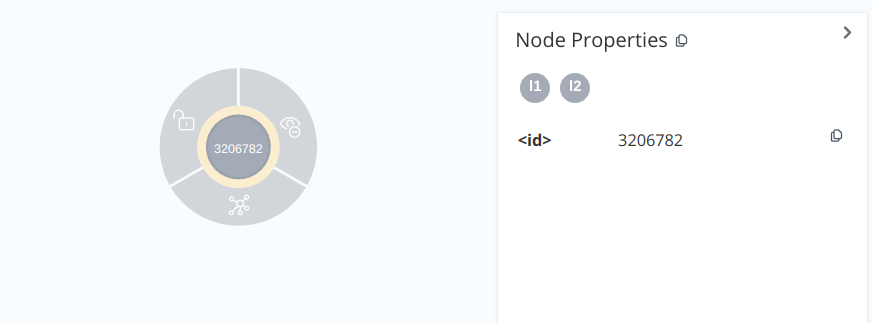

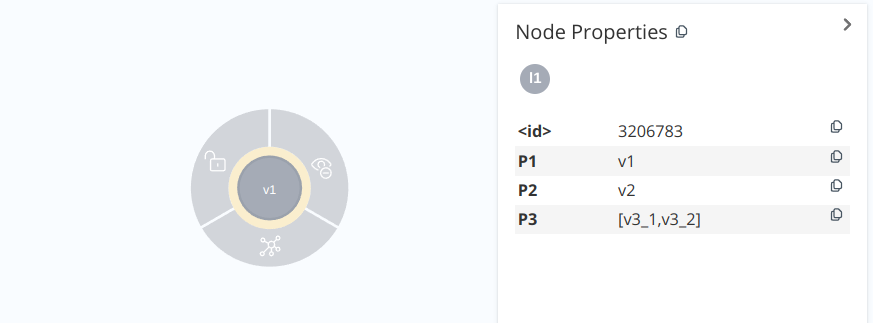

Its functioning is different from that of the relational model. In the relational model, we first create the structure of the database, and then we store tuples, which must be conformant to the structure. The foreign keys are defined at the structural level. In Neo4j, nodes and edges are directly created, with their properties, labels and types as structural information, but no schema is explicitly defined. The topology of the graph can be thought as analogous to the foreign key in the relational model, but defined at the instance level.

A node is of the form

where v is the node variable, which identifies the node in an expression, : l1 : ... : ln is a list of n labels associated

with the node, and  is a list of k properties associated with the node, and their respective assigned

values. Pi is the name of the property, vi is the value.

is a list of k properties associated with the node, and their respective assigned

values. Pi is the name of the property, vi is the value.

To create an empty node:

The ID is assigned internally, with a different number each time. It can be reused by the system but should not be used in applications: it should be considered an internal value to the system. RETURN is used to display the node:

To create a node with two labels:

To create a node with one label and 3 properties:

A query in Cypher is basically a pattern, which will be fullfilled solving the associated pattern-matching problem.

If we want to add a new label to all nodes previously created:

To delete a label from those nodes with a certain label:

A similar thing can be done with properties, which are referred to as node.propertyName:

An edge has the form

![(n) - [e : Type {P : v ,...,P : v }]- > (v)

1 1 k k](summary53x.png)

where n is the source node and v is the destination node. The edge is defined inside the brackets ![[]](summary54x.png) . It can also be

defined of the form

. It can also be

defined of the form  < -

< -![[]](summary56x.png) -

- . e identifies the edge and Type is a mandatory field prefixed by :. Finally, we have

again a list of k properties and their values.

. e identifies the edge and Type is a mandatory field prefixed by :. Finally, we have

again a list of k properties and their values.

Imagine we have 3 employees and want to create the relationship that one of them is the manager of the other two and a date as property. We can do that with:

As we have said, Cypher is a high level query language based on pattern matching. It queries graphs expressing informational or topological conditions.

MATCH: expresses a pattern that Neo4j tries to match.

OPTIONAL MATCH: is like an outer join, i.e., if it does not find a match, puts null.

WHERE: it must go together with a MATCH or OPTIONAL MATCH expression. No order can be assumed for the evaluation of the conditions in the clause, Neo4j will decide.

RETURN: the evaluation produces subgraphs, and any portion of the match can be returned.

RETURN DISTINCT: eliminates duplicates.

ORDER BY: orders the results with some condition.

LIMIT: returns only part of the result. Unless ORDER BY is used, no assumptions can be made about the discarded results.

SKIP: skips the first results. Unless ORDER BY is used, no assumptions can be made about the discarded results.

In addition:

If we don’t need to make reference to a node, we can use () with no variable inside.

If we don’t need to refer to an edge, we can omit it, like (n1)- ->(n2).

If we don’t need to consider the direction of the edge, we can use - -.

If a pattern matches more than one label, we can write the OR condition as | inside the pattern. For example, (n :l1|:l2) matches nodes with label l1 or label l2.

To express a path of any length, use [*]. For a fixed length m use [*m].

To indicate boundaries to the length of a path, minimum n and maximum m, use [*n..m]. To only limit one end use [*n..], [*..m].

Example 5.1. A page X gets a score computed as the sum of all votes given by the pages that references it. If a page Z references a page X, Z gives X a normalized vote computed as the inverse of the number of pages references by Z. To prevent votes of self-referencing pages, if Z references X and X references Z, Z gives 0 votes to X.

We are asked to compute the page rank for each web page. One possible solution is:

The first MATCH-WITH computes, for each node, the inverse of the number of outgoing edges, and passes this number on to the next clause. Now, for each of these p nodes, we look for paths of length 1 where no reciprocity exists.

Another solution uses COLLECT:

In this case, we are using the COLLECT to get the urls that points to x, but x does not point to them, in addition to just computing the value.

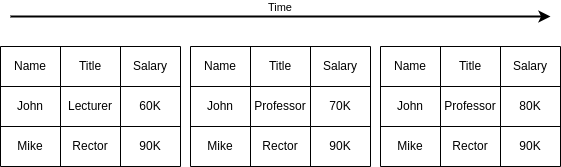

There are many applications in which temporal aspects need to be taken into account. Some examples are in the academic, accounting, insurance, law, medicine,... These applications would greatly benefit from a built-in temporal support in the DBMS, which would make application development more efficient with a potential increase in performance. In this sense, a temporal DBMS is a DBMS that provides mechanisms to store and manipulate time-varying information.

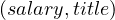

Example 6.1. A case study: imagine we have a database for managing personel, with the relation Employee(Name, Salary, Title, BirthDate). It is easy to know the salary of the employee, or its birthdate:

But it is often the case that we don’t only want to store the current state of things, but also a history. For instance, it can be interesting to store the employment history by extending the relation Employee(Name, Salary, Title, BirthDate, FromDate, ToDate). A dataset sample for this could be the following:

| Name | Salary | Title | BirthDate | FromDate | ToDate |

| John | 60K | Assistant | 9/9/60 | 1/1/95 | 1/6/95 |

| John | 70K | Assistant | 9/9/60 | 1/6/95 | 1/10/95 |

| John | 70K | Lecturer | 9/9/60 | 1/10/95 | 1/2/96 |

| John | 70K | Professor | 9/9/60 | 1/2/96 | 1/1/97 |

For the underlying system, the date columns FromDate and ToDate are no different from the BirthDate column, but it is obvious for us that the meaning is different: FromDate and ToDate need to be understood together as a period of time.

Now, to know the employee’s current salary, the query gets more complex:

Another interesting query that we can think of now is determining the salary history, i.e., all different salaries earned by the employee and in which period of time the employee was earning that salary. For our example, the result would be:

| Name | Salary | FromDate | ToDate |

| John | 60K | 1/1/95 | 1/6/95 |

| John | 70K | 1/6/95 | 1/1/97 |

But how can we achieve this?

One possibility is to print all the history, and let the user merge the pertinent periods.

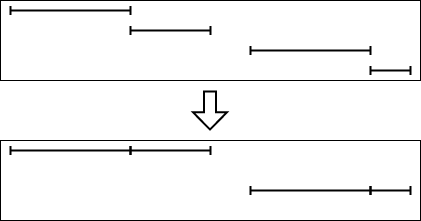

Another one is to use SQL as a means to perform this operation: we have to find those intervals that overlap or are adjacent and that should be merged.

One way to do this in SQL is performing a loop that merges them in pairs, until there is nothing else to merge:

This loop is executed logN times in the worst case, where N is the number of tuples in a chain of overlapping tuples. After the loop, we have to deleted extraneous, non-maximal intervals:

The same thing can be achieved by unifying everything using a single SQL expression as

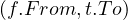

This is a complex query, and the logic is that if we want to merge the periods, we want to get a From and a To such that every period contained between From and To touches or intersect another period, and no period from outside From and To touches or interesects any of the periods inside:

such that such that | ∀x :   ∃y : ∃y :  | ||

| ∧ | |||

∄x :  ∨ ∨ |

Now, the ∀ cannot be used in SQL, but we can use the fact that ∀x : P ≡¬¬

≡¬¬ ≡¬

≡¬ ≡ ∄x : ¬P

≡ ∄x : ¬P ,

noting also that ¬

,

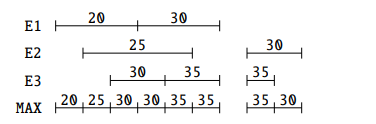

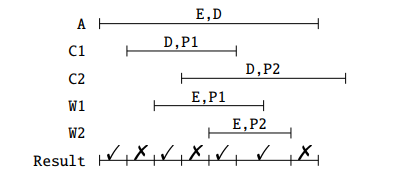

noting also that ¬ ≡ A∧¬B, and that is the query that we have shown above. An intuitive visualization is

the diagram in Figure 1.

≡ A∧¬B, and that is the query that we have shown above. An intuitive visualization is

the diagram in Figure 1.

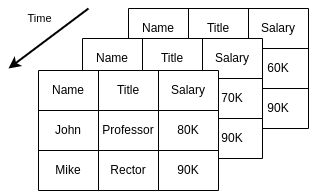

Another possibility to achieve this temporal features is to make the attributes salary and title temporal, instead of the whole table. For this, we can split the information of the table into three tables:

Employee(Name, BirthDate)

EmployeeSal(Name, Salary, FromDate, ToDate)

EmployeeTitle(Name, Title, FromDate, ToDate)

Now, getting the salary history is easier, because we only need to query the table EmployeeSal:

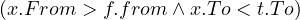

But, what if we want to obtain the history of the combinations of  ? We have to perform a temporal

join. Say we have the following tables:

? We have to perform a temporal

join. Say we have the following tables:

| Name | Salary | FromDate | ToDate |

| John | 60K | 1/1/95 | 1/6/95 |

| John | 70K | 1/6/95 | 1/1/97 |

| Name | Title | FromDate | ToDate |

| John | Assistant | 1/1/95 | 1/10/95 |

| John | Lecturer | 1/10/95 | 1/2/96 |

| John | Professor | 1/2/96 | 1/1/97 |

In this case, the answer to our temporal join would be:

| Name | Salary | Title | FromDate | ToDate |

| John | 60K | Assistant | 1/1/95 | 1/6/95 |

| John | 70K | Assistant | 1/6/95 | 1/10/95 |

| John | 70K | Lecturer | 1/10/95 | 1/2/96 |

| John | 70K | Professor | 1/2/96 | 1/1/97 |

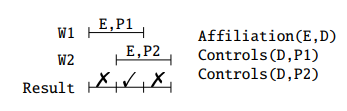

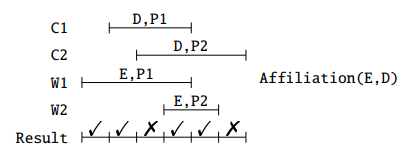

For these, again, we could print the two tables and let the user make the suitable combinations, but it feels better to solve the problem using SQL. The query can be done as:

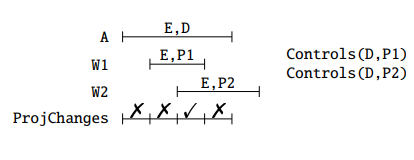

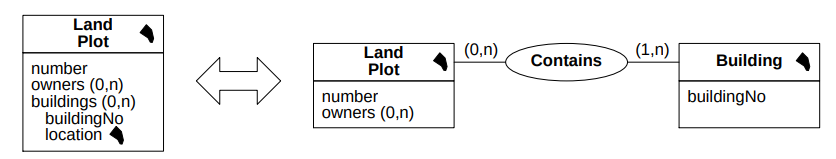

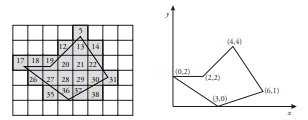

The four cases are depicted in Figure 2.

If we are using a system with embedded temporal capabilities, i.e., implementing TSQL2 (temporal SQL), we can let the system do it and just perform a join as we do it usually:

Time can be modelled in several ways, depending on the use case:

Linear: there is a total order in the instants.

Hypothetical: the time is linear to the past, but from the current moment on, there several possible timelines.

Directed Acyclic Graph (DAG): hypothetical approach in which some possible futures can merge.

Periodic/cyclic time: such as weeks, months... useful for recurrent processes.

We are going to assume a linear time structure.

Regarding the limits of the timeline, we can classify it:

Unbounded: it is infinite to the past and to the future.

Time origin exists: it is bounded on the left, and infinite to the future.

Bounded time: it is bounded on both ends.

Also, the bounds can be unspecified or specified.

We also need to consider the density of the time measures (i.e. what is an instant?). In this sense, we can classify the timeline as:

Discrete: the timeline is isomorphic to the integers. This means it is composed of a sequence of non-descomposable time periods, of some fixed minimal duration, named chronons and between a pair of chronons there is a finite number of chronons.

Dense: in this case, it is isomorphic to the ration numbers, with an infinite number of instants between each pair of chronons.

Continuous: the timeline is isomorphic to the real numbers, and again there is an infinite amount of instants between each pair of chronons.

Usually, a distance between chronons can be defined.

TSQL2 uses a linear time structure, bounded on both ends. The timeline is composed of chronons, which is the smallest possible granularity. Consecutive chronons can be grouped together into granules, giving multiple granularities and enable to convert from one another. The density is not defined and it is not possible to make questions in different granularities. The implementation is basically as discrete and the distance between two chronons is the amount of chronons in-between.

Temporal Types

Instant: a chronon in the time line.

Event: an instantaneous fact, something ocurring at an instant.

Event ocurrence time: valid-time instant at which the event occurs in the real world.

Instant set

Time period: the time between two instants (sometimes called interval, but this conflicts with the SQL type INTERVAL)

Time interval: a directed duration of time

Duration: an amount of time with a known length, but no specific starting or ending instants.

Positive interval: forward motion time.

Negative interval: backward motion time.

Temporal element: finite union of periods.

This way, in SQL92 we have the following Temporal Types:

DATE (YYYY-MM-DD)

TIME (HH:MM:SS)

DATETIME (YYYY-MM-DD HH:MM:SS)

INTERVAL (no default granularity)

And in TSQL2 we have:

PERIOD: DATETIME - DATETIME

The valid time of a fact is the time in which the fact is true in the modelled reality, which is independant of its recording in the database and can be past, present or future.

The transaction time of a fact is when the fact is current in the database and may be retrieved.

These two dimensions are orthogonal.

There are four types of tables:

Snapshot: these are usual SQL table, in which there is no temporality involved. What there is in the table is the current truth. They can be modified through time, but we only have access to the current truth.

Transaction Time: these tables are a set of snapshots tables, in which the past states can be queried for information, but they cannot be modified. When the current truth is modified, a snapshot is taken to preserved the history of changes.

Valid time: these are like transaction tables, but in which modification is permitted everywhere.

Bitemporal: in this case, we have valid time tables that can be taken snapshots to preserve full states.

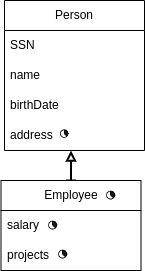

Conceptual modeling is important because it focuses on the application, rather than the implementation. Thus, it is technology independent, which enhances the portability and the durability of the solution. It is also user oriented and uses a formal, unambiguous specification, allowing for visual interfaces and teh exchange and integration of information.

Semantically powerful data structures.

Simple data model, with few clean concepts and standard well-known semantics.

No aritifical time objects.

Time orthogonal to data structures.

Various granularities.

Clean, visual notations.

Intuitive icons/symbols.

Explicit temporal relationships and integrity constraints.

Support of valid time and transaction time.

Past to future.

Co-existence of temporal and tradicional data.

Query languages.

Complete and precise definition of the model.

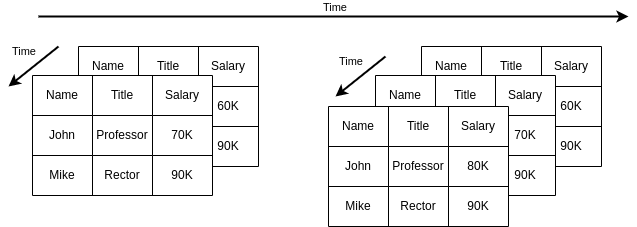

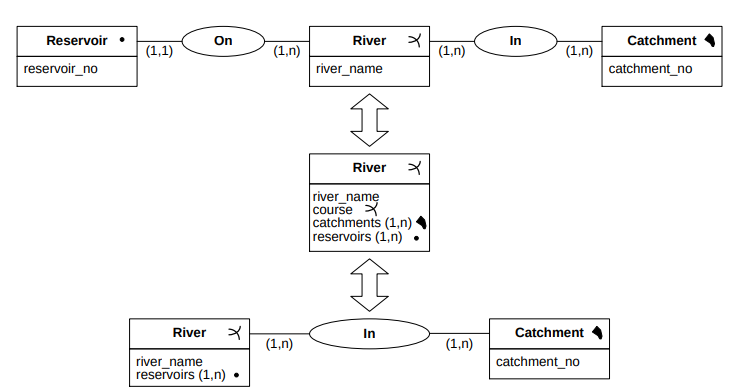

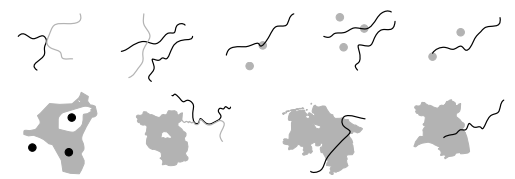

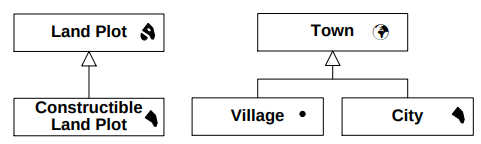

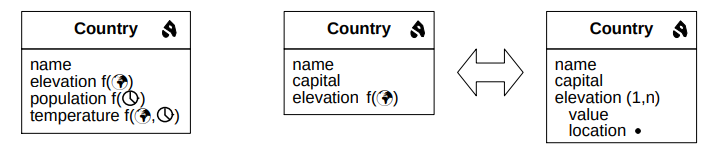

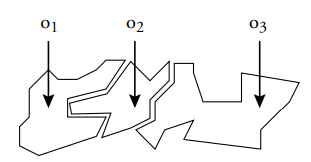

In Figure 3, we can see three different ways to model a schema. Note how they are different, because the temporal attributes are dealt with differently in each of the models.

Time, SimpleTime and ComplexTime are abstract classes.

A temporal object is marked with the symbol  , and it indicates that the object has a set of periods of validity

associated.

, and it indicates that the object has a set of periods of validity

associated.

For example, if we have the relation Employee (name, birthDate, address, salary, projects (1..n)),

then, an instance of the relation can be:

(name, birthDate, address, salary, projects (1..n)),

then, an instance of the relation can be:

|

Peter |

8/9/64 |

Rue de la Paix |

5000 |

{MADS, HELIOS} | [7/94-6/96] [7/97-6/98] Active |

| [7/96-7/97] Suspended | |||||

The red colored text are the life cycle information of the record.

The life cycle of an object can be continuous if there are no gaps between its creation and its deletion, or discontinuous if such gaps exist.

Non-temporal objects can be modeled with the absence of a lifecycle, or with a default life cycle which encodes that it is

always active. A usual option is to put it as ![[0,∞ ]](summary71x.png) .

.

The TSQL2 policy is that temporal operators are not allowed on non-temporal relations. So if we need to perform some kind of temporal operation in non-temporal relations, such as a join with a temporal relation, then we need to use the default life cycle.

A temporal attribute is marked with the symbol  , and it indicates that the attribute itself has a lifecycle

associated.

, and it indicates that the attribute itself has a lifecycle

associated.

For example, if we have the relation Employee(name, birthDate, address, salary , projects (1..n)),

then, an instance of the relation can be:

, projects (1..n)),

then, an instance of the relation can be:

|

Peter |

8/9/64 |

Rue de la Paix | 4000 | [7/94-6/96] |

{MADS, HELIOS} |

| 5000 | [6/96-Now] | ||||

It is also possible to have temporal complex attributes, meaning attributes with subattributes. The temporality can be in either the full attribute, in which case a lifecyle will be attached to the whole attribute, or to a subattribute, in which case the lifecycle will be attached to only the subattribute.

For example, can have the relation Laboratory(name, projects (1..n)  {name, manager, budget}) and

the relation Laboratory(name, projects (1..n) {name, manager

{name, manager, budget}) and

the relation Laboratory(name, projects (1..n) {name, manager  , budget}).

, budget}).

An example of the first relation is:

| LBD

| |

| {(MADS,Chris,1500)} | [1/1/95-31/12/95] |

| {(MADS,Chris,1500),(Helios,Martin,2000)} | [31/12/95-Now] |

An example of the second relation is:

| LBD | ||||||||||||||||||

{(MADS,

|

||||||||||||||||||

In this second case, if we update manager, we add one new element to the manager history. If we update the project name, we would simply change it, because project is not temporal.

Attribute types / timestamping: none, irregular, regular, instants, durations,...

Cardinalities: snapshot and DBlifespan.

Identifiers: snapshot or DBlifespan.

MADS has no implicit constraint, even if they make sense, such as:

The validity period of an attribute must be within the lifecycle of the object it belongs to.

The validity period of a complex attribute is the union of the validity periods of its components.

The life cycles are inherited from parent classes, and more temporal attributes can be added. For example:

In this case, Employee is temporal, even though its parent is not. Employee inherits the temporal attribute address.

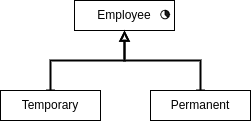

Another example:

In this case, both Temporary and Permanent are temporal objects.

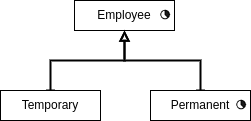

In the case in which the child is also declared as temporal, it is called dynamic temporal generalization and in that case the objects maintains two lifecycles: the one inherited from its parent, and the one defined on itself. For example:

In this case, Permanent employees have two lifecycles: their lifecycle as a permanent and the inherited lifecycle as an employee. The redefined life cycle has to be including the one inherited.

A temporal relationship is also marked with the symbol  , meaning that the relation between the objects possesses a

lifecycle.

, meaning that the relation between the objects possesses a

lifecycle.

Some usual constraints used in temporal relationships:

The validity period of a relationship must be within the intersection of the life cycles of the objects it links.

A temporal relationship can only link temporal objects.

Again, MADS does not impose any constraints.

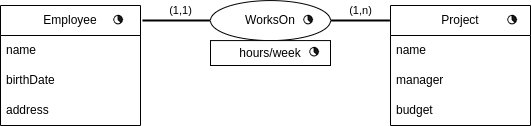

Example 8.2. Let’s see some temporal relationships.

In this case, a possible data can be:

| Employee | |||||||||||||||||

e1

|

|||||||||||||||||

e2

|

|||||||||||||||||

| WorksOn | ||||||||||||||||||

w1

|

||||||||||||||||||

w2

|

||||||||||||||||||

w2

|

||||||||||||||||||

| Project | |||||||||||||||||

p1

|

|||||||||||||||||

p2

|

|||||||||||||||||

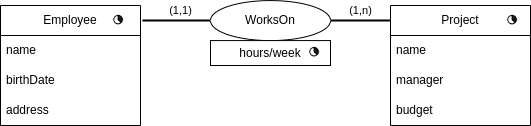

Another example, which is slightly different is the following:

In this case, the data can be:

| Employee | |||||||||||||||||

e1

|

|||||||||||||||||

e2

|

|||||||||||||||||

| WorksOn | |||||||||||||||

w1

|

|||||||||||||||

w2

|

|||||||||||||||

w2

|

|||||||||||||||

| Project | |||||||||||||||||

p1

|

|||||||||||||||||

p2

|

|||||||||||||||||

Now, only currently valid tuples are kept in the relationship. But for these, the history of hours/week is maintained.

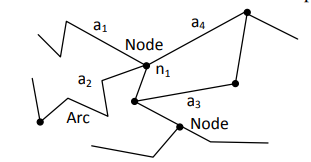

They describe temporal constraints between the life cycles of two objects and they are expressed with Allen’s operator extended for temporal elements:

before : interval i1 ends before i2 starts.

: interval i1 ends before i2 starts.

meets : interval i1 ends just when i2 starts.

: interval i1 ends just when i2 starts.

overlaps : intervals i1 and i2 overlaps at some point.

: intervals i1 and i2 overlaps at some point.

during : interval i1 is contained inside interval i2.

: interval i1 is contained inside interval i2.

starts : intervals i1 and i2 start at the same time.

: intervals i1 and i2 start at the same time.

finishes : intervals i1 and i2 finish at the same time.

: intervals i1 and i2 finish at the same time.

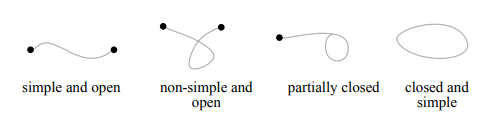

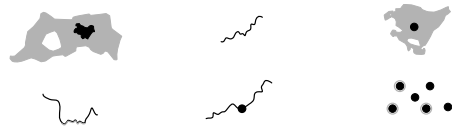

They can be visually seen in Figure

These relationships express a temporal constraint between the whole life cycles or the active periods.

Data type for periods is not available in SQL-92. Thus, a period is simulated with two Date columns: fromDate and toDate, indicating the beginning and end of the period, respectively. Some notes:

The special date ’3000-01-01’ denotes currently valid.

The periods are considered closed-open, [).

A table can be viewes as a compact representation of a sequence of snapshot tables, each one valid on a particular day.

Temporal statement apply to queries, modifications, views and integrity constraints. They are:

Current: applies to the current point in time. Example: what is Bob’s current position?

Time-sliced: applies to some point in time in the past or in the future. Example: what was Bob’s position on 1-1-2007?

Sequenced: applies to each point in time. Example: what is Bob’s position history?

Non-sequenced: applies to all points in time, ignoring the time-varying nature of tables. Example: when did Bob changed history?

Imagine we have the relation Incumbents(SSN,PCN,FromDate,ToDate) and we want to enforce that each employee has only one position at a point in time. If we use the key of the corresponding non-temporal table, (SSN,PCN), then we would not be able to enter the same employee for different periods. Thus, we need to somehow include the dates in the key. The options are: (SSN,PCN,FromDate), (SSN,PCN,ToDate) or (SSN,PCN,FromDate,ToDate). None of them captures the constraint that we want to enforce, because there are overlapping periods associated with the same SSN: we need a sequenced constraint, applied at each point in time. All constraints specified on a snapshot table have sequenced counterparts, specified on the analogous valid-time table.

Example 9.1. Employees have only one position at a point in time.

We have to decide how to timestamp current data. One alternative is to put NULL in the ToDate, which allows to identify current records by checking: WHERE ToDate IS NULL. But it possesses some disadvantages:

Users get confused with a data of NULL.

In SQL many comparisons with a NULL return false, so we might exclude some rows that should not be excluded from the query.

Other uses or NULL are not available.

Another approach is to set the ToDate to the largest value in the timestamp domain: ’3000-01-01’. The disadvantages of this are:

The DB states that something will be true in the far future.

’Now’ and ’Forever’ are represented in the same way.

There are different kind of duplicates in temporal databases:

Value equivalent: the values of the nontimestamp columns are equivalent. Example: all rows in Table 6.

Sequenced duplicates: when in some instant, the rows are duplicate. Example: rows 1 and 2 in the table.

Current duplicates: they are sequenced duplicates at the current instant. Example: rows 4 and 5 in the table.

Nonsequenced duplicates: the values of all columns are identical. Example: rows 2 and 3 in the table.

| SSN | PCN | FromDate | ToDate |

| 111223333 | 120033 | 1996-01-01 | 1996-06-01 |

| 111223333 | 120033 | 1996-06-01 | 1996-10-01 |

| 111223333 | 120033 | 1996-06-01 | 1996-10-01 |

| 111223333 | 120033 | 1996-10-01 | Now |

| 111223333 | 120033 | 1997-12-01 | Now |

To prevent value equivalent rows: we define a secondary key using UNIQUE(SSN,PCN).

To prevent nonsequenced duplicates: UNIQUE(SSN,PCN,FromDate,ToDate).

To prevent current duplicates: no employee can have two identical positions at the current time:

To prevent current duplicates, assuming no future data, we notice that current data will have the same ToDate, so we set UNIQUE(SSN,PCN,ToDate).

To prevent sequenced duplicates, we do as with the trigger for sequenced primary keys, but disregarding NULL values (because now we are not making a key UNIQUE+NOTNULL, but only UNIQUE):

To prevent sequenced duplicates, asumming only modifications to current data, we can use UNIQUE(SSN, PCN, ToDate).

Now, we want to enforce that each employee has at most one position. In a snapshot table, this would be equivalent to UNIQUE(SSN), and now the sequenced constraint is: at any time each employee has at most one position, i.e., SSN is sequenced unique:

We can also think about the nonsequenced constraint: an employee cannot have more than one position over two identical periods, i.e. SSN is nonsequenced unique: UNIQUE(SSN,FromDate,ToDate).

Or current constraint: an employee has at most one position now, i.e. SSN is current unique:

We want to enforce that Incumbents.PCN is a foreign key for Position.PCN. There several possible cases, depending on what tables are temporal.

In this case, if we want the PCN to be a current foreign key, the PCN of all current incumbents must be listed in the current positions:

Or it can be a sequenced foreign key:

A contiguous history is such that there are no gaps in the history. Enforcing contiguous history is a nonsequenced constraint, because it requires examining the table at multiple points of time.

We can also have the situation that Incumbents.PCN is a FK for Position.PCN and Position.PCN defines a contiguous history. In this case, we can omit the part of searching for the P2 that extends a P that ends in the middle of I.

Current FK:

We have the relations:

| SSN | FirstName | LastName | BirthDate |

| SSN | PCN | FromDate | ToDate |

| SSN | Amount | FromDate | ToDate |

| Position

| |

| PCN | JobTitle |

Say we want to obtain Bob’s current position. Then: